This is how to render hyper-realistic foliage in OpenGL!

Real-time subsurface scattering is one of the most powerful graphical upgrades you can add to your game, and it’s a big part of why Star Wars Battlefront’s environments look so stunning. I’m going to explain the science, textures, and shader code you need, and share a few AAA industry secrets, to take your graphics to the next level.

What Is Subsurface Scattering

Subsurface scattering is the process in which light enters a material and gets scattered in random directions beneath its surface. Typically, light hitting an object is either absorbed or reflected. This changes the light’s spectrum, and it’s how everything gets its colour.

However, some materials also refract light, causing it to bend and collide with more atoms, losing energy each time. For high-density materials like metal, the refracted light never makes it back to the surface. But when it comes to plants, the light escapes. And because part of it has been absorbed along the way, it now has a different colour. That’s why white light passing through green leaves ends up looking yellow.

This is what makes plants in modern AAA games look so realistic. But getting it to work in real-time has left many developers banging their heads against the wall.

Implementing Subsurface Scattering in OpenGL

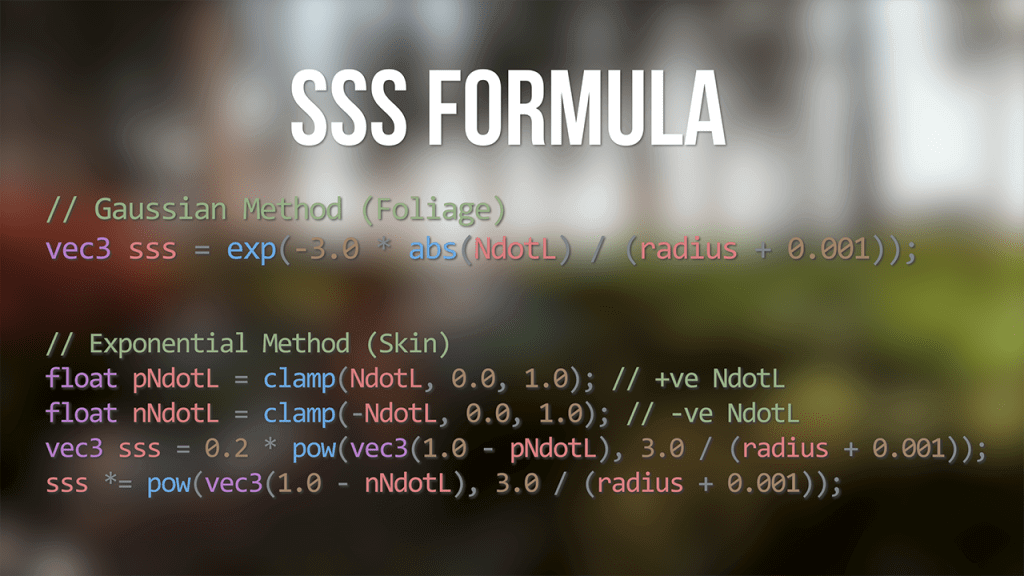

The actual math behind real-time subsurface scattering isn’t that complicated. It essentially boils down to a simple Gaussian function for vegetation, or an exponential function when rendering skin.

There are other models out there, but these provide a solid balance between graphical fidelity and performance. However, calculating the inputs of these functions is where we need to dive down the rabbit hole.
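To make the two curve shapes concrete, here’s a small Python sketch of unnormalised Gaussian and exponential falloff functions. It’s plain CPU code rather than GLSL so it can be run and checked. The function names, the `radius` width parameter, and the choice to leave the curves unnormalised are assumptions for illustration, not the exact functions Bright Life uses.

```python
import math

def gaussian_falloff(distance: float, radius: float) -> float:
    """Gaussian falloff (vegetation): 1.0 at distance 0, width controlled by radius."""
    if radius <= 0.0:
        # A zero radius means light can't penetrate, so there's no scattering.
        return 0.0
    return math.exp(-(distance * distance) / (2.0 * radius * radius))

def exponential_falloff(distance: float, radius: float) -> float:
    """Exponential falloff (skin): 1.0 at distance 0, decays as exp(-d / r)."""
    if radius <= 0.0:
        return 0.0
    return math.exp(-distance / radius)
```

For the same radius, the exponential curve drops faster close to the origin but stays higher beyond twice the radius, so it has a longer tail.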

Subdermal Maps

Let’s start with subdermal maps. Instead of simulating the energy loss from light colliding with atoms, a subdermal map stores the colour of white light after it has been refracted inside the material and escaped.

Since each material has its own density and properties, light behaves differently in each one. So, accurately generating these textures requires a bit of artistic finesse. Or you can cheat by simply increasing the saturation of the albedo map inside the fragment shader.

After sampling the subdermal map inside the shader, you just need to multiply it by the colour of the incoming light and its strength factor.
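Here’s a rough Python sketch of both steps in this section, assuming linear RGB tuples in [0, 1]. The first step is the saturation cheat, which extrapolates a colour away from its Rec. 709 luma (grey) value. The second is the multiplication by the light’s colour and strength. The function names and the luma-based saturation approach are assumptions. A fragment shader would do the same arithmetic on `vec3` values.

```python
# Rec. 709 luma weights, used to find the grey value of a colour.
LUMA = (0.2126, 0.7152, 0.0722)

def boost_saturation(rgb, amount):
    """Push a colour away from its grey value; amount > 1 increases saturation."""
    luma = sum(c * w for c, w in zip(rgb, LUMA))
    # amount = 0 gives grey, amount = 1 the original colour, amount > 1 extrapolates.
    return tuple(min(max(luma + (c - luma) * amount, 0.0), 1.0) for c in rgb)

def subdermal_term(subdermal_rgb, light_rgb, light_strength):
    """Subdermal colour tinted by the incoming light colour and scaled by its strength."""
    return tuple(s * l * light_strength for s, l in zip(subdermal_rgb, light_rgb))

# Hypothetical leaf albedo texel; saturating it fakes a subdermal texel.
albedo = (0.30, 0.50, 0.20)
subdermal = boost_saturation(albedo, 1.5)
lit = subdermal_term(subdermal, (1.0, 0.95, 0.9), 2.0)
```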

Thickness Maps

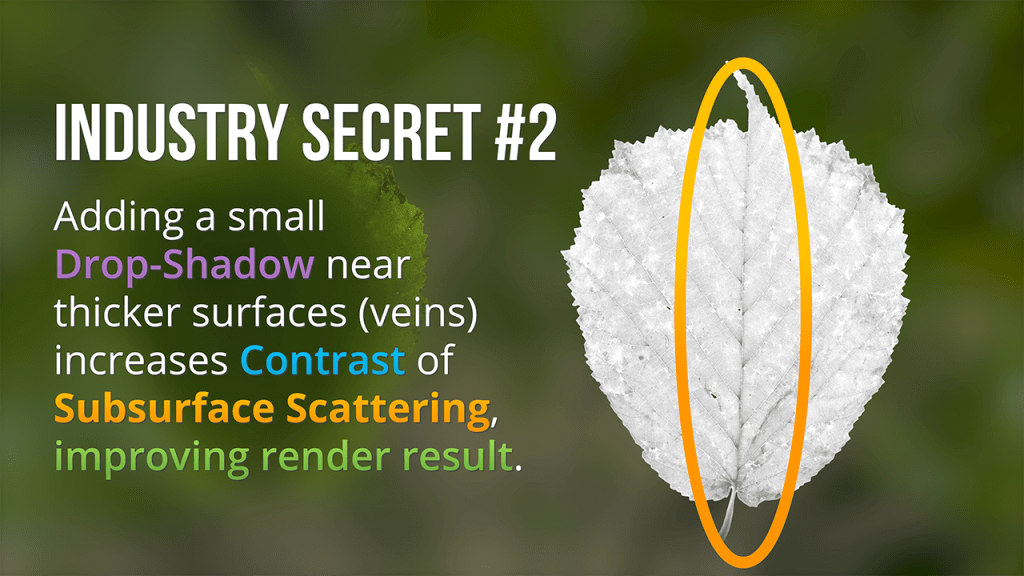

The thickness map is where a lot of detail can be added. It’s a greyscale texture that provides depth information that’s not present in the 3D geometry of a mesh. Looking again at tree leaves, these are almost always rendered as a flat plane. However, with a thickness map, we can reveal all the veins within each leaf as light shines through.

Making thickness maps is simple enough. The darker the value, the thinner the surface. So, for tree leaves, we want the veins to be lighter than the body. But in some cases, a thickness map isn’t needed. Right now, Bright Life, my 3D plant generator, is only generating mushrooms, which absorb almost all the light passing through them. Therefore, there’s no point in spending time making a thickness map, since the added details won’t show in the final result.

Instead, it’s far quicker and more memory-efficient to just use a single floating-point value between zero and one. And since this can be adjusted at runtime, it also opens the door to interesting effects.

Scatter Maps

The last texture is the scatter map. And as the name suggests, it contains all the information we need to scatter the light inside a material. Accurately simulating light diffusion patterns can easily set your computer on fire. So, to keep things running in real-time, we can bake approximations into this texture.

There are a bunch of models that have been developed over the years. But since we’re interested in speed, you can just use another industry shortcut: take the subdermal map, boost the saturation even further, shift the hue down slightly, and apply a Gaussian blur filter.

The intensity of the blur dictates the amount of scattering. That opens the door to more artistic liberty, so you can match a particular art style without being bound by the laws of physics.
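As a sketch of that offline bake, here’s a Python version that works on plain lists. The default `saturation_boost` and `hue_shift` values, the three-sigma kernel size, and the clamp-to-edge border handling are all illustrative assumptions. In practice you might do this step in an image editor or texture tool instead. `sigma` plays the role of the blur intensity described above. Shifting the hue "down" here means decreasing the hue angle, which moves green towards yellow.

```python
import colorsys
import math

def scatter_texel(rgb, saturation_boost=1.3, hue_shift=0.02):
    """Boost saturation and shift the hue down slightly (hue measured in [0, 1) turns)."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = (h - hue_shift) % 1.0
    s = min(s * saturation_boost, 1.0)
    return colorsys.hsv_to_rgb(h, s, v)

def gaussian_kernel(sigma):
    """Normalised 1D Gaussian weights covering roughly three standard deviations."""
    half = max(1, math.ceil(3.0 * sigma))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_channel(image, sigma):
    """Separable Gaussian blur of a 2D list of floats, clamping at the borders."""
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    height, width = len(image), len(image[0])

    def clamp(i, n):
        return min(max(i, 0), n - 1)

    # Horizontal pass, then vertical pass over the horizontally blurred rows.
    rows = [[sum(k * image[y][clamp(x + i - half, width)] for i, k in enumerate(kernel))
             for x in range(width)] for y in range(height)]
    return [[sum(k * rows[clamp(y + i - half, height)][x] for i, k in enumerate(kernel))
             for x in range(width)] for y in range(height)]
```

A colour texture would be blurred one channel at a time after running every texel through `scatter_texel`.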

Calculating The Subsurface Radius

With the textures completed, it’s time to start bringing everything together by calculating the subsurface radius.

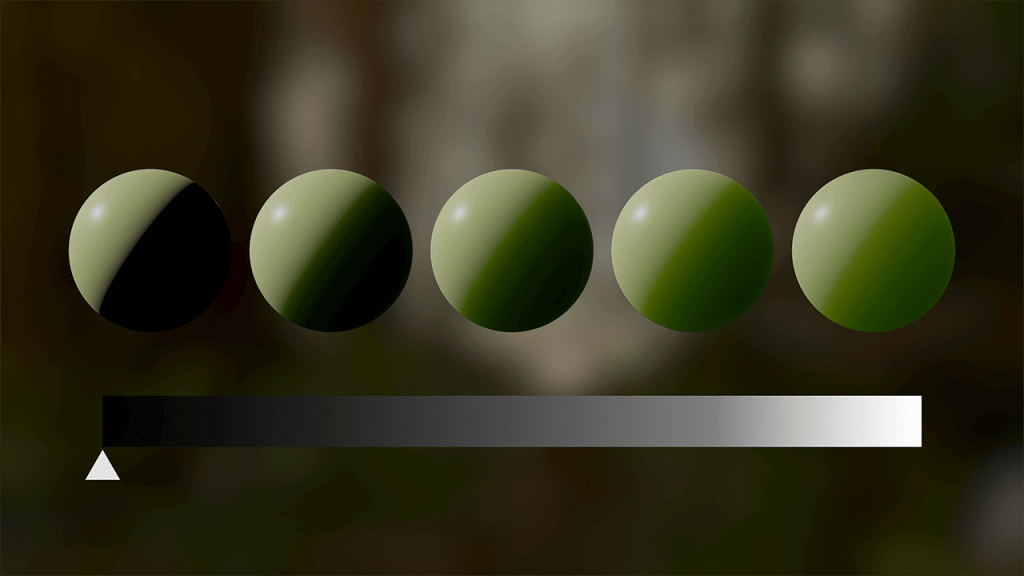

The radius determines how far light can penetrate through a material. A value of zero equates to infinite thickness, resulting in no subsurface scattering. That’s the opposite of how a thickness map works, so we need to take the inverse.

However, while zero is a hard boundary, there’s no actual upper limit. And boosting the radius beyond one will amplify the strength of the subsurface effect.

Now, the scatter map enters the equation. Currently, the radius is constant in all three dimensions, creating a perfect sphere. But by multiplying the radius by the sampled scatter value, we morph it into a deformed blob, scattering light by different amounts along the axes.
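Here’s a minimal Python sketch of the radius so far. It assumes that "taking the inverse" means one minus the thickness value (a reciprocal would be another reading). It also assumes a hypothetical `strength` multiplier is how the radius gets pushed past one. The `geometry_trace` parameter is a placeholder for the final radius factor described in the next section.

```python
def subsurface_radius(thickness, scatter_rgb, strength=1.0, geometry_trace=1.0):
    """Per-channel subsurface radius (a sketch, not Bright Life's exact formula).

    thickness:      greyscale thickness in [0, 1] (darker = thinner)
    scatter_rgb:    sampled scatter map colour
    strength:       hypothetical multiplier; values above 1 amplify the effect
    geometry_trace: the last radius factor, computed from the shadow map (1.0 = ignore)
    """
    # Invert the thickness so a fully thick surface (1.0) gives a radius of zero.
    base = max(1.0 - thickness, 0.0) * strength
    # Multiplying by the scatter colour turns the "sphere" into a per-channel blob.
    return tuple(base * s * geometry_trace for s in scatter_rgb)
```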

Calculating Geometry Depth Without Ray Tracing

The last radius factor is the geometry trace, which is the distance light has travelled through a 3D object. Unless you’re using static lighting, the geometry trace cannot be baked into a texture. It HAS to be calculated in real time, which is a serious problem because ray tracing is really slow.

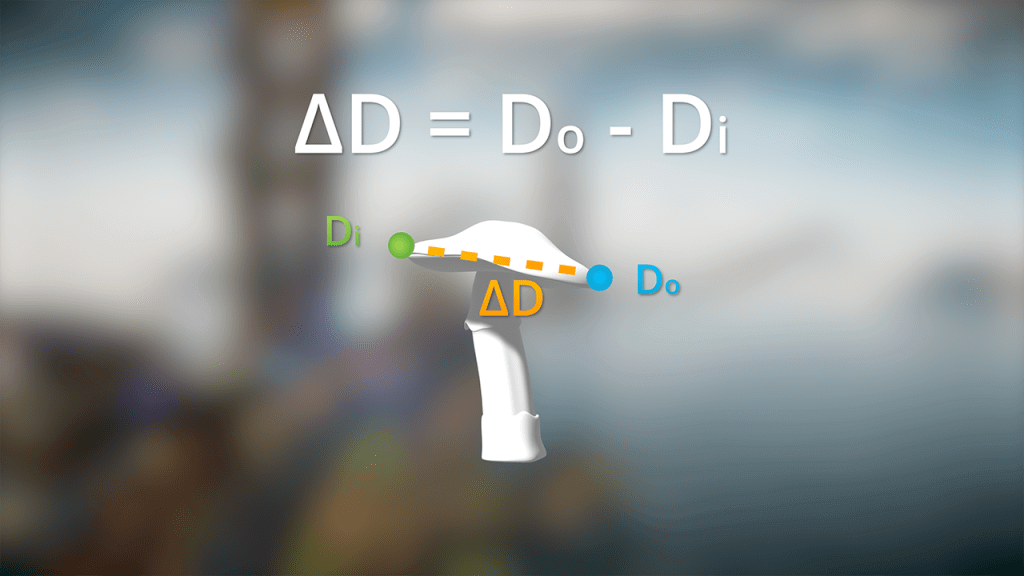

This is where we start thanking a man named Christophe Hery. While working at Industrial Light & Magic, he came up with a brilliant method to calculate geometry depth without any ray tracing whatsoever. In fact, it’s so elegant that despite being over 20 years old, it’s still widely used by AAA studios today.

By comparing the depth of a fragment with the depth stored at its equivalent pixel in a shadow map, you end up with two positions: the entry and destination points of light. And by subtracting one from the other, you get a close approximation of the distance light has travelled through a mesh’s geometry.

Leveraging Shadow Maps

Bright Life only uses one directional light source, so for this example, I’ll demonstrate the process with directional shadows. However, with a bit of tweaking, Hery’s method will also work with spot and point lights.

Since directional light rays travel in parallel, I need to use an orthographic projection matrix. And the view matrix is simply a LookAt matrix pointing along the light’s direction. Multiplying these two matrices together (projection × view) gives you the light space transformation matrix, which can be sent directly to the shadow map shader.
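These matrices are normally built with a maths library such as GLM (`glm::ortho` and `glm::lookAt`). To show what they contain, here’s a dependency-free Python sketch that mirrors GLM’s right-handed OpenGL conventions. It uses row-major nested lists. GLM and GLSL store matrices column-major, so you’d need to transpose the result, or pass `GL_TRUE` as the `transpose` argument of `glUniformMatrix4fv`, before uploading it. The `light_space_matrix` helper and its parameters are hypothetical choices for illustration: the distance to back the eye off along the light direction, the half-size of the ortho box, and the near and far planes.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def look_at(eye, center, up):
    """Right-handed view matrix (row-major) following glm::lookAt's conventions."""
    f = normalize(tuple(c - e for c, e in zip(center, eye)))
    s = normalize(cross(f, up))  # fails if up is parallel to the view direction
    u = cross(s, f)
    return [[s[0], s[1], s[2], -dot(s, eye)],
            [u[0], u[1], u[2], -dot(u, eye)],
            [-f[0], -f[1], -f[2], dot(f, eye)],
            [0.0, 0.0, 0.0, 1.0]]

def ortho(left, right, bottom, top, near, far):
    """Orthographic projection into OpenGL's [-1, 1] clip cube, like glm::ortho."""
    return [[2.0 / (right - left), 0.0, 0.0, -(right + left) / (right - left)],
            [0.0, 2.0 / (top - bottom), 0.0, -(top + bottom) / (top - bottom)],
            [0.0, 0.0, -2.0 / (far - near), -(far + near) / (far - near)],
            [0.0, 0.0, 0.0, 1.0]]

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def transform(m, p):
    """Apply a 4x4 matrix to a 3D point (w = 1), returning (x, y, z, w)."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(4))

def light_space_matrix(light_dir, center, distance, half_size, near, far):
    """Projection * view for a directional light (all parameters are illustrative)."""
    d = normalize(light_dir)
    eye = tuple(c - distance * x for c, x in zip(center, d))
    view = look_at(eye, center, (0.0, 1.0, 0.0))
    proj = ortho(-half_size, half_size, -half_size, half_size, near, far)
    return mat_mul(proj, view)
```

Points closer to the light end up with smaller depth values, which is what the shadow map stores.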

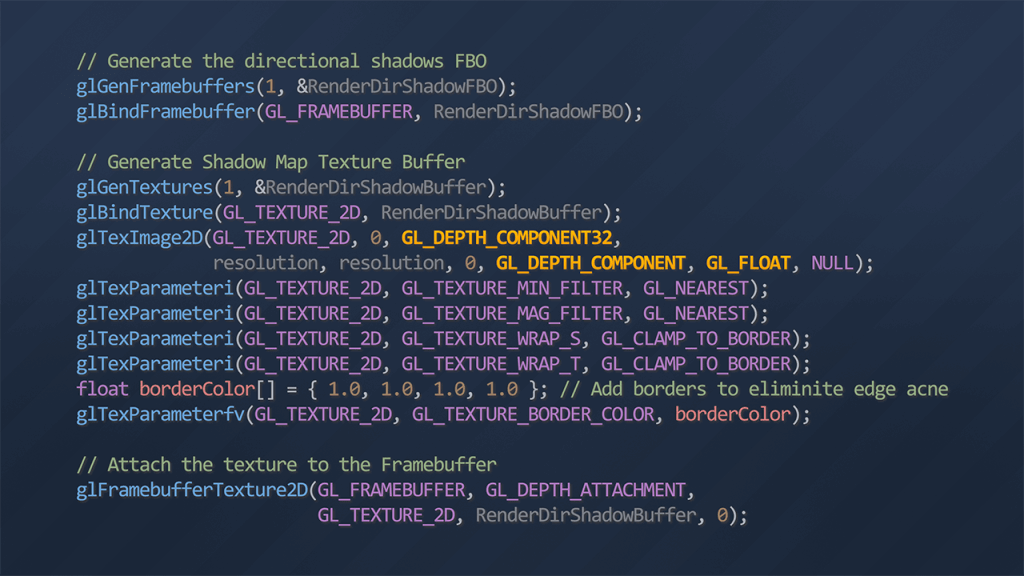

We need to store the shadow map in a texture for later. Therefore, it’s time to create a new framebuffer.

I explained how framebuffers work in the last devlog. But this time, we’re doing things a bit differently. The attached texture will use the GL_DEPTH_COMPONENT format with floating-point precision instead of the usual GL_RGB, because we only want to store high-precision depth values. And since we never write or read any colour data, glDrawBuffer and glReadBuffer can both be set to GL_NONE.

All that’s left is to bind the new framebuffer and draw the scene using the shadow map shader. The shader itself uses the same structure as the PBR shader, but it can be massively simplified. We only need to calculate the light space position of each vertex. And since there’s no colour output, the fragment shader can be left completely blank; the depth values are still written to the shadow map.

Shadow maps can be a bit tricky. So, if you’re struggling, there’s an excellent article on LearnOpenGL written by Joey de Vries that explains the process in detail.

Finalising The Geometry Trace

Now, it’s time to render the scene as normal, passing in the shadow map and the light space matrix that were just calculated.

In the vertex shader, the light space position of each vertex needs to be calculated and passed along. Then, it’s time to build the geometry trace function. To sample the shadow map, we need some UV coordinates.

Using the light space vertex position we just calculated, we can perform a perspective divide. This transforms the position from clip space into normalised device coordinates, which range from -1 to 1. Multiplying by 0.5 and adding 0.5 then remaps them to between zero and one.

By sampling the shadow map at these coordinates, we now have the light entry point. To get the destination point, it’s just a matter of taking the Z component of the same remapped light space position. And subtracting the entry from the destination returns the distance that light has travelled through the geometry.
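Here’s the same sequence as a CPU-side Python sketch. A 2D list of depths in [0, 1] stands in for the shadow map, with row 0 treated as v = 0. The helper names, the nearest-texel lookup, and the clamp to zero for fragments that face the light are assumptions. In GLSL, this would be a few lines using `texture()` on the depth sampler.

```python
def shadow_uv_and_depth(light_space_pos):
    """Perspective divide, then remap NDC from [-1, 1] to [0, 1] texture space."""
    x, y, z, w = light_space_pos
    ndc = (x / w, y / w, z / w)  # w is 1 for an orthographic projection
    return tuple(c * 0.5 + 0.5 for c in ndc)

def sample_nearest(depth_map, u, v):
    """Nearest-texel lookup in a 2D list of depths, clamped to the edges."""
    height, width = len(depth_map), len(depth_map[0])
    px = min(max(int(u * width), 0), width - 1)
    py = min(max(int(v * height), 0), height - 1)
    return depth_map[py][px]

def traced_distance(light_space_pos, depth_map):
    """Depth-space distance between where light enters the mesh and this fragment."""
    u, v, destination = shadow_uv_and_depth(light_space_pos)
    entry = sample_nearest(depth_map, u, v)
    # Fragments in front of the stored depth are treated as zero distance.
    return max(destination - entry, 0.0)
```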

The hard bit is officially over, but we’re not quite finished yet. If you try to preview this distance, everything looks black, when we should be seeing a gradient.

The problem is that the sampled depth values are normalised between the projection matrix’s near and far planes. As a result, a few centimetres of depth becomes an incredibly small number. So, it needs to be scaled up with a clamped exponential function.

Note that we’re also taking the inverse since the geometry trace needs to tend towards zero the further light has travelled. That’s really important, and you’ll see why in a moment.
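One way to read "a clamped exponential function" with the inverse folded in is exp(-k·d), clamped to [0, 1]. It equals 1 when no distance has been travelled and tends towards zero as the distance grows. The Python sketch below assumes that form and a hypothetical falloff constant; the exact curve in Bright Life may differ.

```python
import math

def geometry_trace(distance, falloff=50.0):
    """Map a tiny depth-space distance to a visible [0, 1] factor.

    falloff is a hypothetical tuning constant: larger values make the trace drop
    off faster. The negative exponent gives 1.0 at zero distance and tends to 0.0
    the further light travels through the mesh.
    """
    return min(max(math.exp(-distance * falloff), 0.0), 1.0)
```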

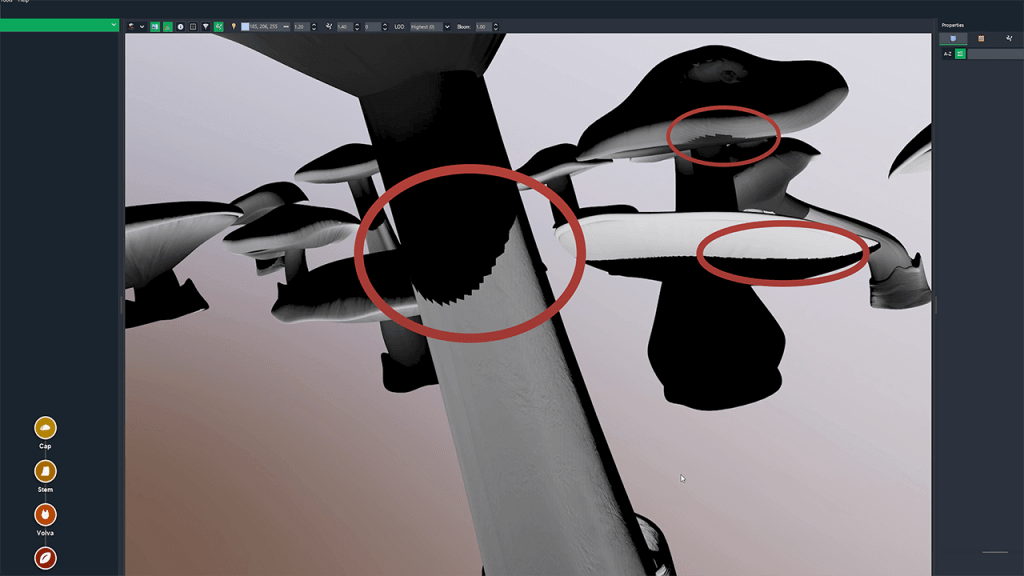

Now, if we preview the geometry trace, everything is finally working. But, if we zoom in, it’s clear we have some horrible jagged edges appearing due to the shadow map’s limited resolution. So, let’s use some industry knowledge to quickly fix that.

Fixing Artefacts

First off, let’s eliminate any projection artefacts that can appear along the edges of the mesh. In the vertex stage of the shadow map shader, push each vertex slightly outwards along its normal vector. Now, when sampling the limited-resolution shadow map, pixels from the background will no longer bleed onto the mesh and create artefacts.
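In a GLSL vertex shader, this is essentially `position + normal * offset`, applied before the light space transform. Here’s the same idea as a small Python sketch. The normalisation step and the default `amount` are assumptions. The right value depends on your scene scale and shadow map resolution.

```python
def offset_along_normal(position, normal, amount=0.01):
    """Push a vertex slightly outwards along its normal (amount is in world units)."""
    length = sum(n * n for n in normal) ** 0.5
    return tuple(p + n / length * amount for p, n in zip(position, normal))
```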

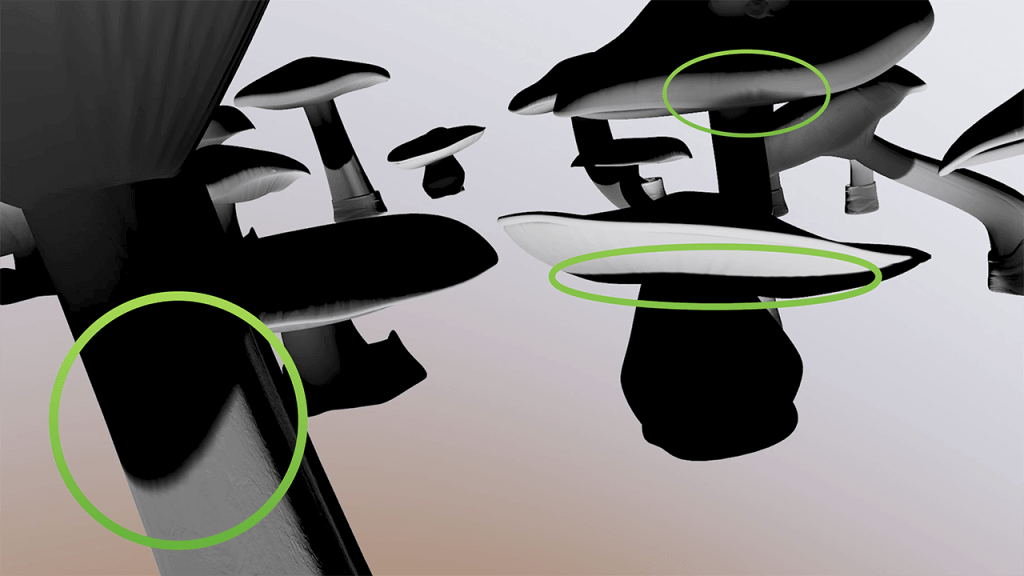

Next, let’s get rid of those nasty jagged edges. Instead of sampling the shadow map once, we can sample it multiple times at different offsets to get a weighted average. To take it further, let’s introduce some bilinear filtering.

Normally, OpenGL does this for us, and that’s why textures don’t look pixelated when a 3D model is scaled up massively. But sadly, OpenGL won’t do this for floating-point textures. So, we have to do it ourselves inside the fragment shader.
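Here’s a Python sketch of both fixes, using the same 2D-list stand-in for the shadow map. `sample_bilinear` blends the four texels around a coordinate by hand. `sample_filtered` averages bilinear samples on a grid of one-texel offsets. Uniform weights and the 3×3 grid are simplifying assumptions; the weighted average described above could use, for example, Gaussian weights instead.

```python
import math

def texel(depth_map, x, y):
    """Fetch a texel with clamp-to-edge addressing."""
    height, width = len(depth_map), len(depth_map[0])
    return depth_map[min(max(y, 0), height - 1)][min(max(x, 0), width - 1)]

def sample_bilinear(depth_map, u, v):
    """Manual bilinear filtering: blend the four texels surrounding (u, v)."""
    height, width = len(depth_map), len(depth_map[0])
    # Shift by half a texel so texel centres land on integer coordinates.
    fx, fy = u * width - 0.5, v * height - 0.5
    x0, y0 = math.floor(fx), math.floor(fy)
    tx, ty = fx - x0, fy - y0
    row0 = texel(depth_map, x0, y0) * (1 - tx) + texel(depth_map, x0 + 1, y0) * tx
    row1 = texel(depth_map, x0, y0 + 1) * (1 - tx) + texel(depth_map, x0 + 1, y0 + 1) * tx
    return row0 * (1 - ty) + row1 * ty

def sample_filtered(depth_map, u, v, radius=1):
    """Average bilinear samples on a (2 * radius + 1)^2 grid of one-texel offsets."""
    height, width = len(depth_map), len(depth_map[0])
    total, count = 0.0, 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            total += sample_bilinear(depth_map, u + dx / width, v + dy / height)
            count += 1
    return total / count
```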

With those changes made, the jagged edges are almost entirely gone, even though the resolution of the shadow map hasn’t changed.

Source Code

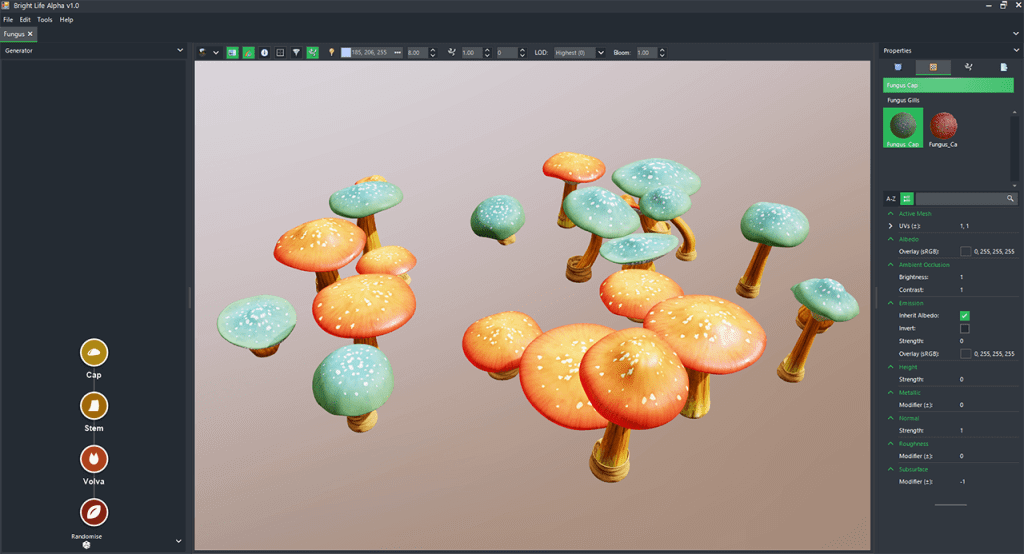

The geometry trace is now complete, and with it, so is the radius. Now, all we need to do is take the output of the subsurface scattering function and add it to the diffuse component of the PBR shader. And there you have it: real-time subsurface scattering.

Despite relying on a lot of approximation, the end result is a massive improvement. However, this method does have some limitations. The most prominent is that it doesn’t play nicely with 3D models that have holes. In practice, most games don’t encounter such situations very often. But if you do, take a look at a technique called depth peeling.

With subsurface scattering and other advanced rendering techniques all finished, Bright Life is delivering increasingly realistic renders for artists. However, there’s still plenty of work to do before this 3D plant generator is production-ready. And if you want to help speed things along, you can support the channel by joining the Patreon.

All members can download Bright Life right now as well as receive continuous updates and bug fixes. It’s also where you’ll find all the subsurface shader functions, which you can access for free.

Let me know what you want to see next in the comments below, and remember to subscribe to get your free copy of Bright Life once it’s finished. Thanks for watching, and I’ll see you in the next one.